Python Programming

Lecture 12 Data Cleaning, Automation and Word Cloud

12.1 Data Cleaning (数据清洗)

-

During the course of doing data analysis and modeling, a significant amount of time is spent on data preparation: loading, cleaning, transforming, and rearranging. Such tasks are often reported to take up 80% or more of an analyst's time.

-

In pandas, we've adopted a convention used in the R programming language by referring to missing data as NA, which stands for not available. In statistics applications, NA data may either be data that does not exist or that exists but was not observed.

-

When cleaning up data for analysis, it is often important to do analysis on the missing data itself to identify data collection problems or potential biases in the data caused by missing data.

Filtering Out Missing Data

import pandas as pd

from numpy import nan as NA

data = {'state': ['Ohio', 'Ohio', NA, 'Nevada', 'Nevada', 'Nevada'],

'year': [2000, 2001, 2001, 2002, 2002, 2003],

'pop': [1.5, 1.7, 3.6, 2.4, 2.9, NA]}

frame = pd.DataFrame(data)

>>> frame

state year pop

0 Ohio 2000 1.5

1 Ohio 2001 1.7

2 NaN 2001 3.6

3 Nevada 2002 2.4

4 Nevada 2002 2.9

5 Nevada 2003 NaN

>>> frame.info()

RangeIndex: 6 entries, 0 to 5

Data columns (total 3 columns):

state 5 non-null object

year 6 non-null int64

pop 5 non-null float64

dtypes: float64(1), int64(1), object(1)

memory usage: 272.0+ bytes

>>> frame.dropna()

state year pop

0 Ohio 2000 1.5

1 Ohio 2001 1.7

3 Nevada 2002 2.4

4 Nevada 2002 2.9

>>> frame['debt']=NA

>>> frame

state year pop debt

0 Ohio 2000 1.5 NaN

1 Ohio 2001 1.7 NaN

2 NaN 2001 3.6 NaN

3 Nevada 2002 2.4 NaN

4 Nevada 2002 2.9 NaN

5 Nevada 2003 NaN NaN

>>> frame=frame.dropna(axis=1,how="all")

state year pop

0 Ohio 2000 1.5

1 Ohio 2001 1.7

2 NaN 2001 3.6

3 Nevada 2002 2.4

4 Nevada 2002 2.9

5 Nevada 2003 NaN

# only keep rows with at least 3 non-NaN values

>>> frame.dropna(thresh=3)

state year pop

0 Ohio 2000 1.5

1 Ohio 2001 1.7

3 Nevada 2002 2.4

4 Nevada 2002 2.9

Filling in missing data

>>> frame.fillna(0)

state year pop

0 Ohio 2000 1.5

1 Ohio 2001 1.7

2 0 2001 3.6

3 Nevada 2002 2.4

4 Nevada 2002 2.9

5 Nevada 2003 0.0

>>> frame.fillna({'state':'Ohio',

...: 'pop': 2})

state year pop

0 Ohio 2000 1.5

1 Ohio 2001 1.7

2 Ohio 2001 3.6

3 Nevada 2002 2.4

4 Nevada 2002 2.9

5 Nevada 2003 2.0

Removing Duplicates

>>> data = pd.DataFrame({'k1': ['one', 'two'] * 3 + ['two'],

....: 'k2': [1,3,2,3,3,4,4], 'v1':[0,1,2,3,4,5,5]})

>>> data

k1 k2 v1

0 one 1 0

1 two 3 1

2 one 2 2

3 two 3 3

4 one 3 4

5 two 4 5

6 two 4 5

>>> data.duplicated()

0 False

1 False

2 False

3 False

4 False

5 False

6 True

dtype: bool

>>> data.drop_duplicates()

k1 k2 v1

0 one 1 0

1 two 3 1

2 one 2 2

3 two 3 3

4 one 3 4

5 two 4 5

>>> data.drop_duplicates(['k1','k2'])

k1 k2 v1

0 one 1 0

1 two 3 1

2 one 2 2

4 one 3 4

5 two 4 5

# keep="last", False

Transforming Data

>>> data = pd.DataFrame({'food': ['bacon', 'pulled pork', 'bacon',

'Pastrami', 'corned beef', 'Bacon',

'pastrami', 'honey ham', 'nova lox'],

'ounces': [4, 3, 12, 6, 7.5, 8, 3, 5, 6]})

>>> data

food ounces

0 bacon 4.0

1 pulled pork 3.0

2 bacon 12.0

3 Pastrami 6.0

4 corned beef 7.5

5 Bacon 8.0

6 pastrami 3.0

7 honey ham 5.0

8 nova lox 6.0

>>> def f(x):

return x.lower()

>>> lowercased = data['food'].apply(f)

0 bacon

1 pulled pork

2 bacon

3 pastrami

4 corned beef

5 bacon

6 pastrami

7 honey ham

8 nova lox

>>> meat_to_animal = {'bacon': 'pig','pulled pork': 'pig',

'pastrami': 'cow','corned beef': 'cow',

'honey ham': 'pig','nova lox': 'salmon'}

>>> data['animal'] = lowercased.map(meat_to_animal)

food ounces animal

0 bacon 4.0 pig

1 pulled pork 3.0 pig

2 bacon 12.0 pig

3 Pastrami 6.0 cow

4 corned beef 7.5 cow

5 Bacon 8.0 pig

6 pastrami 3.0 cow

7 honey ham 5.0 pig

8 nova lox 6.0 salmon

Replacing Values

>>> data.replace("pig","pig-1")

food ounces animal

0 bacon 4.0 pig-1

1 pulled pork 3.0 pig-1

2 bacon 12.0 pig-1

3 Pastrami 6.0 cow

4 corned beef 7.5 cow

5 Bacon 8.0 pig-1

6 pastrami 3.0 cow

7 honey ham 5.0 pig-1

8 nova lox 6.0 salmon

>>> data.replace(["pig","cow"],"pig-2")

food ounces animal

0 bacon 4.0 pig-2

1 pulled pork 3.0 pig-2

2 bacon 12.0 pig-2

3 Pastrami 6.0 pig-2

4 corned beef 7.5 pig-2

5 Bacon 8.0 pig-2

6 pastrami 3.0 pig-2

7 honey ham 5.0 pig-2

8 nova lox 6.0 salmon

many to many, by dict

>>> data.replace({"pig":"pig-1","cow":"cow-1"})

Detecting and Filtering Outliers

>>> data[data['ounces']>=8]=NA

food ounces animal

0 bacon 4.0 pig

1 pulled pork 3.0 pig

2 NaN NaN NaN

3 Pastrami 6.0 cow

4 corned beef 7.5 cow

5 NaN NaN NaN

>>> data = data.dropna()

Computing Dummy Variables

>>> dummies = pd.get_dummies(data['animal'],dtype=int)

>>> data.join(dummies)

food ounces animal cow pig salmon

0 bacon 4.0 pig 0 1 0

1 pulled pork 3.0 pig 0 1 0

3 Pastrami 6.0 cow 1 0 0

4 corned beef 7.5 cow 1 0 0

6 pastrami 3.0 cow 1 0 0

7 honey ham 5.0 pig 0 1 0

8 nova lox 6.0 salmon 0 0 1

12.2 Office Automation (办公自动化)

操作Excel文件

import pandas as pd

db =pd.read_excel('db_top.xlsx')

# pd.read_excel('db_top.xlsx', sheet_name="Sheet1")

# pd.read_excel('db_top.xlsx', sheet_name=0, index_col=0, header=0, usecols=[0,2])

# 载入excel文件,sheet=0为第一个sheet,index_col指定某一列为index

# header=0指定某一行为header,uscecols提取哪几列

>>> db.columns

Index(['id', '全名', '导演', '类型', '摘要', '评分', '时长', '时间',

'年份', '演员', '投票数','发行信息', '中文名', '外文名'],dtype='object')

>>> genre = db[['中文名', '类型']].copy()

>>> genre

中文名 类型

0 星际穿越 剧情,科幻,悬疑,家庭,冒险

1 辛德勒的名单 剧情,历史,战争

2 唐伯虎点秋香 喜剧,爱情,古装

3 致命ID 剧情,悬疑,惊悚

4 指环王3:王者无敌 剧情,动作,奇幻,冒险

.. ... ...

all_g_list = []

for x in genre['类型']:

all_g_list.append(x.split(','))

genre["类型"] = all_g_list

# 单个电影

x = pd.DataFrame()

x['类型'] = genre.iloc[0]['类型']

x["中文名"] = genre.iloc[0]['中文名']

x['d']=1

x= pd.pivot_table(x,index='中文名', columns='类型',values='d')

>>> x

类型 冒险 剧情 家庭 悬疑 科幻

中文名

星际穿越 1 1 1 1 1

# 所有电影

genre_list = pd.DataFrame()

for i in range(len(genre)):

x = pd.DataFrame()

x['类型'] = genre.iloc[i]['类型']

x["中文名"] = genre.iloc[i]['中文名']

x['d']=1

x = pd.pivot_table(x,index='中文名', columns='类型',values='d')

genre_list=pd.concat([genre_list,x])

genre_list.fillna(0)

genre_list.to_csv('g.csv',encoding='utf-8-sig')

# 这是excel文件且包含中文,最好是加上 encoding='utf-8-sig'(Excel 读取不乱码)

We can run regression with those dummy variables and scores of movies.

pip install seaborn

import matplotlib.pyplot as plt

import seaborn as sns

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

director = db['导演'].value_counts()

d = director.head(10)

sns.barplot(y=(d.index), x=(d.values), palette="RdBu_r")

plt.savefig('director.pdf',dpi=300)

Saving to .xlsx

director.to_excel(excel_writer='director.xlsx', sheet_name="rank", encoding='utf-8')

# director.to_excel(excel_writer='director.xlsx', index=False, columns=[...], na_rep=0, inf_rep=0)

Multiple Sheets

excelpath = '...'

writer = pd.ExcelWriter(excelpath, engine="xlsxwriter")

df1.to_excel(writer, sheet_name="first")

df2.to_excel(writer, sheet_name="second")

df3.to_excel(writer, sheet_name="third")

writer.save()

Exercise

加载 “db_top” 数据集。按照影片时长对这些电影进行排序,展示时长最长的前 5 部电影以及时长最短的前 5 部电影。提示:用sort_values方法。

处理Word文件

Document 对象包含一个Paragraph对象的列表,表示文档中的段落(用户在Word 文档中输入时,如果按下回车,新的段落就开始了)。每个Paragraph对象都包含一个Run 对象的列表。

pip install python-docx

import docx # 不是python-docx

doc = docx.Document('example.docx')

len(doc.paragraphs)

doc.paragraphs[0].text

doc.paragraphs[1].text

doc.paragraphs[1].runs[0].text

doc.paragraphs[1].runs[1].text

doc.paragraphs[1].runs[2].text

doc.paragraphs[1].runs[3].text

def getText(filename):

doc = docx.Document(filename)

fullText = []

for para in doc.paragraphs:

fullText.append(para.text)

return '\n'.join(fullText)

print(getText('example.docx'))

写入word文件

import docx

doc = docx.Document()

doc.add_paragraph('Hello world!')

paraObj1 = doc.add_paragraph('This is a second paragraph.')

paraObj2 = doc.add_paragraph('This is a yet another paragraph.')

paraObj1.add_run(' This text is being added to the second paragraph.')

doc.save('multipleParagraphs.docx')

获取其他元素

# 标题也是段落,只是 style 不一样(Heading 1、Heading 2 …)

# 获取所有一级标题

for p in doc.paragraphs:

if p.style.name == "Heading 1":

print(p.text)

# Word 的页眉在“节(Section)”里面。

section = doc.sections[0] # 获取第一个节,通常就一个

header = section.header

for p in header.paragraphs:

print("页眉:", p.text)

footer = section.footer

for p in footer.paragraphs:

print("页脚:", p.text)

for table in doc.tables:

print(table) # 获取所有表格

table = doc.tables[0]

for row in table.rows:

for cell in row.cells:

print(cell.text)

cell = table.cell(1, 2) # 第2行第3列

print(cell.text)

12.3 Word Cloud (词云)

WordCloud

Install WordCloud

- Python 3.9安装问题的解决方案

- Win系统安装方式:WordCloud的安装文件下载地址

-

pip install (安装文件的绝对路径) - Mac系统安装方式:先在终端输入如下命令

-

xcode-select --installpip install wordcloud

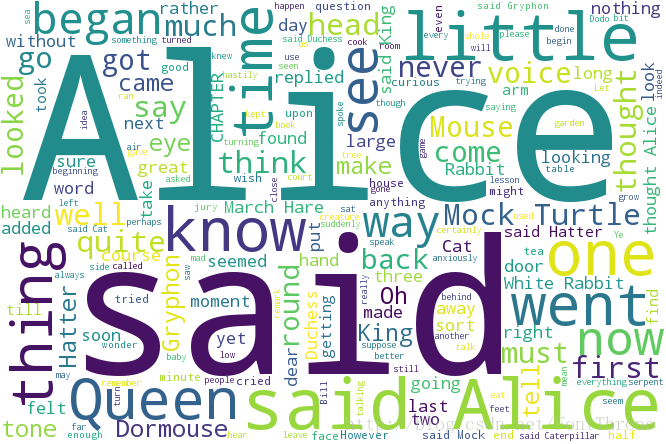

A long string

from wordcloud import WordCloud

string = 'Importance of relative word frequencies for font-size.\

With relative_scaling=0, only word-ranks are considered. With \

relative_scaling=1, a word that is twice as frequent will have \

twice the size. If you want to consider the word frequencies \

and not only their rank, relative_scaling around .5 often looks good.'

font = r'C:\Windows\Fonts\Arial.TTF'

wc = WordCloud(font_path=font, # Chinese

background_color='white',

width=1000,

height=800,

).generate(string)

wc.to_file('s1.png') # save figure

from matplotlib import pyplot as plt

plt.imshow(wc) # show figure by plt

plt.axis('off')

plt.show()

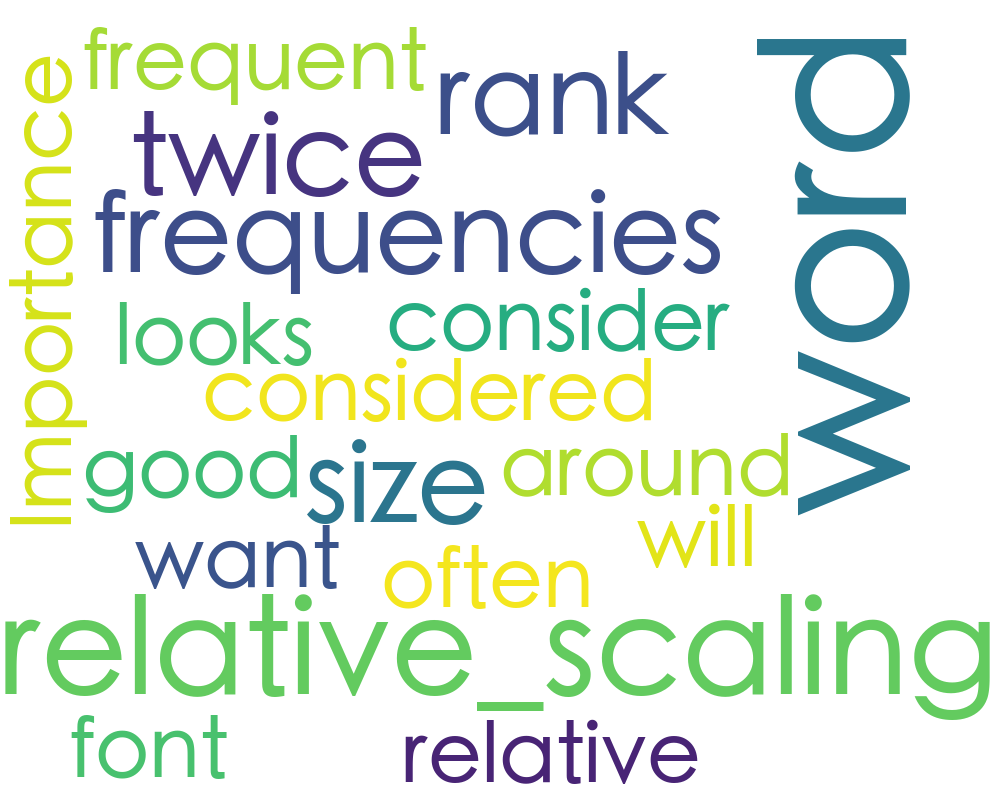

Loading text file

from wordcloud import WordCloud

filename = 'Harry Potter.txt'

with open(filename, encoding="utf-8") as f_obj:

contents = f_obj.read()

#font = r'C:\Windows\Fonts\Arial.TTF'

font = "/System/Library/Fonts/STHeiti Medium.ttc"

wc = WordCloud(font_path=font,

background_color='white',

width=1000,

height=800,

).generate(contents)

wc.to_file('s2.png')

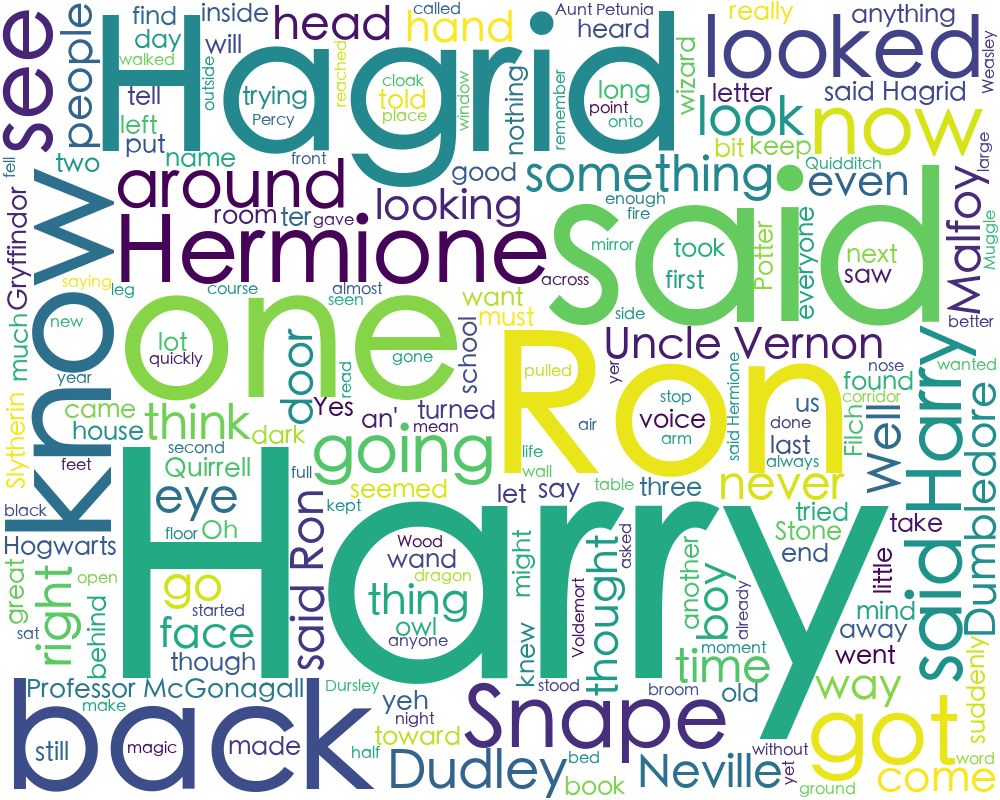

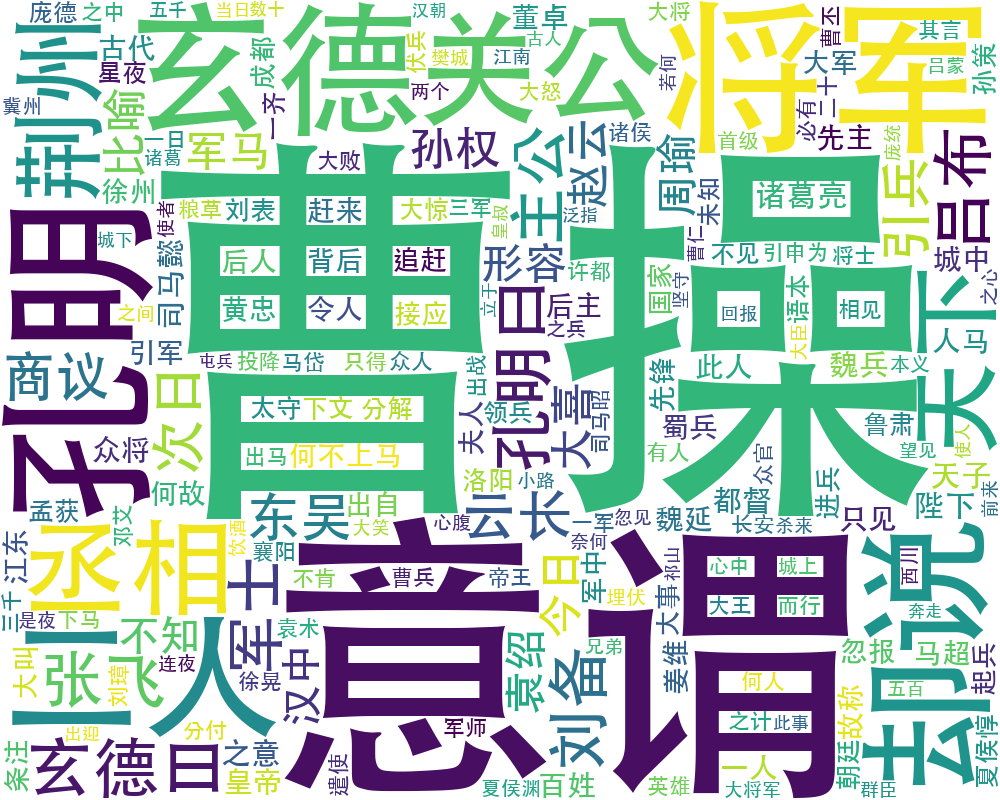

Loading Chinese text file

from wordcloud import WordCloud

filename = 'sanguo.txt'

with open(filename , encoding="utf-8") as f_obj:

contents = f_obj.read()

#font = r'C:\Windows\Fonts\Arial.TTF'

font = "/System/Library/Fonts/STHeiti Medium.ttc"

wc = WordCloud(font_path=font,

background_color='white',

width=1000,

height=800,

).generate(contents)

wc.to_file('s3.png')

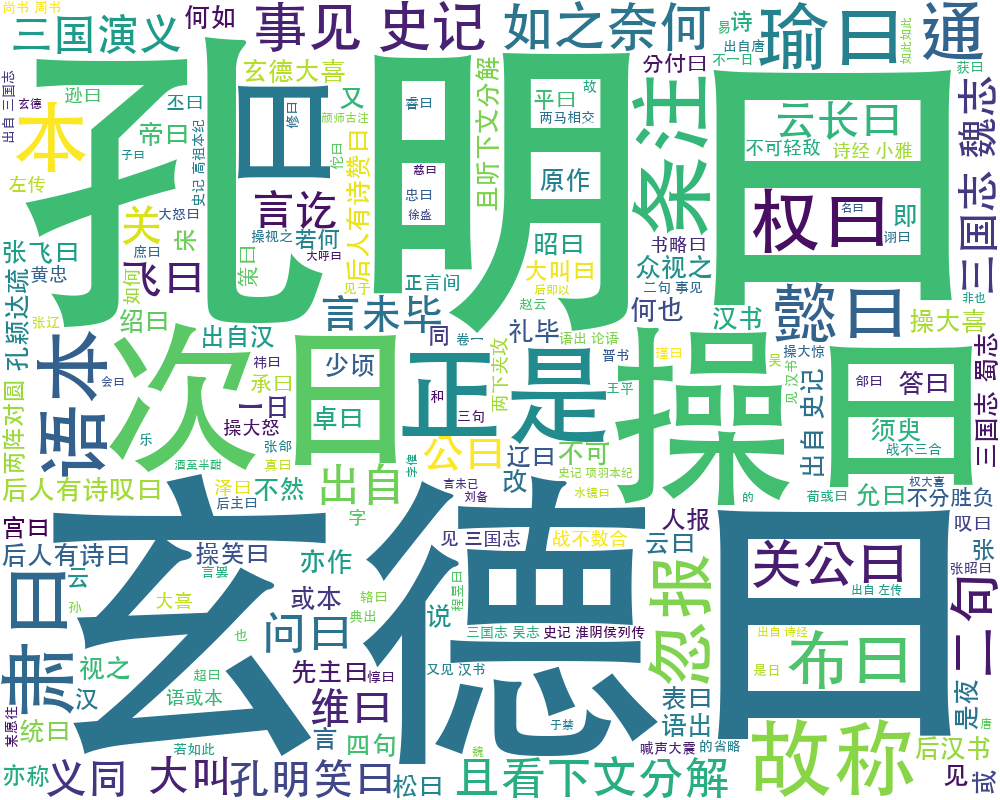

Using jieba

from wordcloud import WordCloud

import jieba

filename = 'sanguo.txt'

with open(filename , encoding="utf-8") as f_obj:

contents = f_obj.read()

s = jieba.lcut(contents)

txt = " ".join(s)

#font = r'C:\Windows\Fonts\Arial.TTF'

font = "/System/Library/Fonts/STHeiti Medium.ttc"

wc = WordCloud(font_path=font,

background_color='white',

width=1000,

height=800,

).generate(txt)

wc.to_file('s4.png')

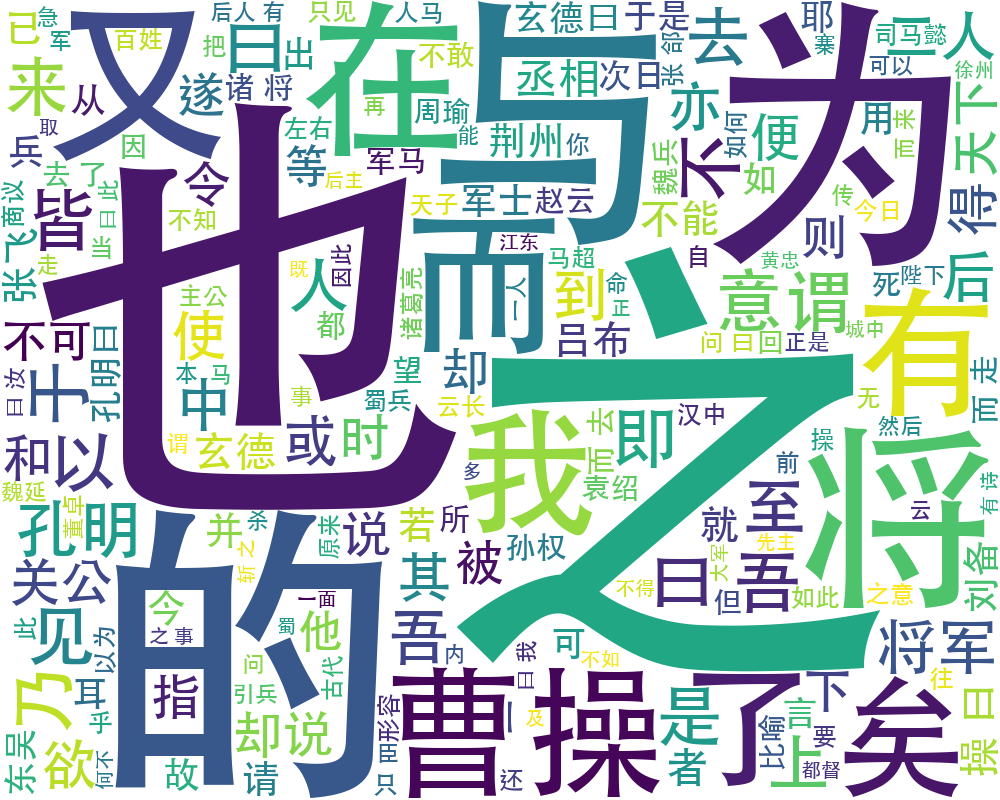

Stopwords

中文常用停用词表

from wordcloud import WordCloud

import jieba

filename = 'sanguo.txt'

with open(filename, encoding="utf-8") as f_obj:

contents = f_obj.read()

def sw(filename):

with open(filename, encoding="utf-8") as f_obj:

x = f_obj.readlines()

y = [word.strip() for word in x]

return y

name_list = ['baidu_stopwords.txt', 'cn_stopwords.txt',

'hit_stopwords.txt','scu_stopwords.txt']

stop_word = []

for x in name_list:

stop_word.extend(sw(x))

stop_word = list(set(stop_word))

s = jieba.lcut(contents)

result = [word for word in s if word not in stop_word]

s = [word for word in result if len(word)>1]

txt = " ".join(s)

#font = r'C:\Windows\Fonts\Arial.TTF'

font = "/System/Library/Fonts/STHeiti Medium.ttc"

wc = WordCloud(font_path=font,

background_color='white',

width=1000,

height=800,

).generate(txt)

wc.to_file('s5.png')

Summary

- Pandas

- Reading: Python for Data Analysis, Chapter 7

- Reading: Python Crash Course, Chapter 10.1